Fact-checking has limited impact on fake news stories in Germany

This post originally appeared as "Germany's Fight Against Fake News: Can It Work?" on the website of the Center for International Media Assistance (cima.ned.org) and is published here with permission. The Center for International Media Assistance (CIMA) is a think tank based in Washington, DC, that works to promote diverse and innovative media systems for an informed public around the world.

The study was part of a collaboration between IREX and Columbia University. Tara Susman-Peña from IREX collaborated with Anya Schiffrin and a team of students at the School of International and Public Affairs at Columbia on the research, which focused on filter bubbles, fake news, and other aspects of the misinformation ecosystem.

The fight against misinformation in media continues to ramp up. We are witnessing an explosion of proposed solutions and approaches for filtering “fake news.” Many foundations, NGOs, and tech platforms are putting money into media literacy, fact-checking, and financial support for news outlets as ways to combat the spread of misinformation.

In Europe, citizens and lawmakers are feeling the pressure, as the trifecta of populism, fake news, and threats of Russian interference increases the sense of urgency. Amid worries surrounding upcoming elections in Germany, the country has taken an especially proactive approach to stopping the spread of fake news.

The response to fake news in Germany is an interesting case study as a policy experiment: it is the first time that a government has attempted to hold technology and social network companies responsible for the content shared on their platforms. In January 2017, government officials first stated their intentions to fine Facebook for any fake stories that went viral. On June 26, the German Bundestag officially passed the “Network Enforcement Act,” which goes into effect in October 2017. The law would impose fines of €5 million to €50 million on social media companies that fail to take down “obviously illegal” content (which includes defamation, hate speech, or violent rhetoric) within 24 hours.

Unsurprisingly, Facebook has been openly critical of the law. It has been joined by many press freedom and civil rights groups who fear the law could have unintended negative consequences, such as encouraging self-censorship or putting greater pressure on tech companies to preemptively censor any story.

But Facebook has its critics too. In the aftermath of the 2016 US elections, Facebook and other social media networks faced a growing wave of accusations that they had done little to stop the spread of misinformation and may have helped it spread. In part, this is because social media platforms rely heavily on algorithms to determine what users see on their timelines. This means that the “virality” of a post is rewarded with greater visibility, whether the story shared is credible or not.

In response to criticism, in this case from the German government, Facebook increased efforts to provide more content fact-checkers and internal moderators. It also partnered with the independent German nonprofit investigative group Correctiv for fact-checking. However, an investigation last year by Süddeutsche Zeitung found that Facebook monitors in Germany are working under increasing pressure, with low wages and little training, as they sift through thousands of posts each day. Given how quickly a story can grow, it is inevitable that monitors will not always catch a misleading or false story quickly enough.

“IM Erika”: Insights on German libel

Oftentimes, unreliable, debunked rumors resurface in social media feeds. In a partnership project between IREX and Columbia University SIPA, we explored different approaches to tackling the problem of misinformation online. Within the project, some of our research focused on a fake news story that has popped up in digital and traditional German media multiple times over the past decade: a libel story accusing Angela Merkel of being a former intelligence officer for the Stasi, the secret police of East Germany. The story, dubbed “IM Erika,” has appeared multiple times in alternative publications in the German news landscape, especially during elections or national crises. Having resurfaced in early 2017, it is a prime example of the state of fake news in Germany.

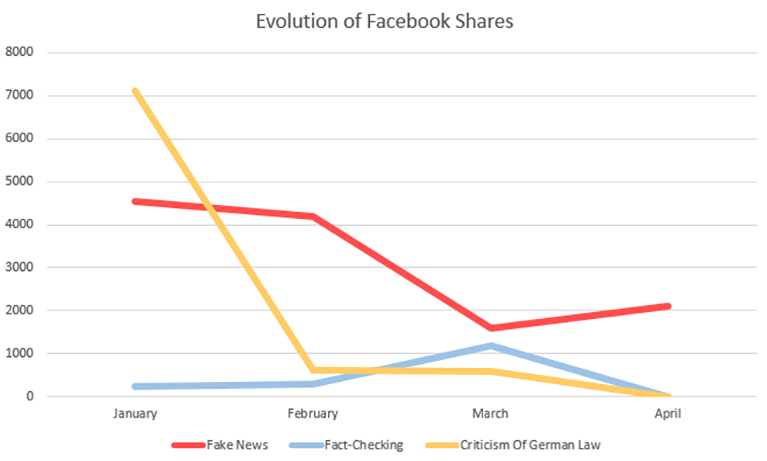

To track the influence of the story, we scraped all posts traceable by Google with the keywords “Merkel” and “Stasi” that originated in Germany from January 2017, when the law was first proposed, until April 2017. We mined the number of times the posts/news stories were shared on Facebook newsfeeds in order to get a sense of their impact. Last, we independently examined the stories to determine their content—namely whether they were fake news stories, fact-checking pieces, or a surprising third category: criticisms of the proposed bill to combat fake news, using the term “Stasi” as a criticism or slander against the chancellor.

The most obvious finding is that the libel persists, and vastly outperforms fact-checking. “IM Erika” stories were shared by a total of 4,550 users in January, dropping to 2,100 in April. Both numbers show that the story had significant reach: according to estimates, it could have appeared in up to 300,000 newsfeeds. This legitimizes the concern of the German lawmakers, but also pokes a hole in their policy: the story has been debunked multiple times, yet it persisted. Even if a story is taken off social media quickly, it can reappear, and it is difficult to control who may share a particularly popular piece of fake news. In many cases, even in the face of facts or reputable take-downs, belief in rumors will persist. Germany can certainly fine Facebook for fake news stories, but a fine does not make the story disappear from the internet, nor does it prevent the story from showing up again later.
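
To put the two share counts in proportion, the short Python sketch below computes the relative decline between January and April. It uses only the two figures reported above; the function name `relative_decline` is a hypothetical helper introduced for illustration and is not part of the original study's tooling.

```python
# Share counts for "IM Erika" stories, taken from the figures reported above.
SHARES_JANUARY = 4550
SHARES_APRIL = 2100


def relative_decline(start: int, end: int) -> float:
    """Return the percentage drop from `start` to `end` (hypothetical helper)."""
    if start <= 0:
        raise ValueError("start must be a positive count")
    return (start - end) / start * 100


decline_pct = relative_decline(SHARES_JANUARY, SHARES_APRIL)
print(f"Shares fell by {decline_pct:.1f}% between January and April 2017")
```

By this rough measure, shares fell by a little more than half over the four months, which leaves a substantial number of users still sharing a story that had been debunked repeatedly.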

Our research confirmed concerns raised by Süddeutsche Zeitung and others that fact-checking is not well-run and may not be enough to combat misinformation. Stories that fact-checked “IM Erika” did not perform well in terms of Facebook shares. This may be because of a lack of interest or paid promotion. The fact-checking simply did not draw enough shares to make an impact. One proposed solution is for social media platforms to hire the copy editors laid off in media company cuts and have them perform the fact-checking.

The search also revealed something we did not originally anticipate. In January 2017, a number of well-performing posts and opinion pieces used the word “Stasi” without referencing the “IM Erika” story. Instead, “Stasi” was used to criticize the German law’s seeming effect of monitoring social networks. In East Germany, the Stasi was notorious for monitoring the private conversations of individuals, and now critics charged that Merkel’s government was proposing policies that were similarly intrusive. In short, the proposed law caused quite a controversy. And while little can be said with certainty, the finding shows a potential unintended effect of suppression of speech: extremity and polarization. The goal for Germany is to eradicate fake news on social media. Getting rid of the story, though, does not mean you can change the minds of those who shared it. In response to state clampdowns on freedom of speech, some may double down on their beliefs.

Can policy combat misinformation?

Why a story engages an audience may have as much to do with the particular political climate, group identity, and other personal factors as with the story itself. There are many reasons fake stories go viral, so it is difficult to pinpoint when to intervene to stop their spread. As our research reveals, focusing on one solution alone may not be enough to disrupt the cycle of fake news.

The particular story tracked here was not new. It is a story that has bounced around online in Germany for years. Its very presence on social media shows the long-standing power of rumors. Often, stories are debunked by multiple sources but continue to live on, pointing to the limitations of fact-checking. The most extreme example of this was “Pizzagate,” in which a fake American story about Hillary Clinton’s involvement in a child-trafficking ring led to a shooting at the supposed site of the crime. Though the story was deleted in November and widely refuted by multiple reliable sources, it still had enough hold on audiences to result in a violent reaction a month later.

Stories with salacious or extreme headlines will, unsurprisingly, gain traction quickly, especially when they prey on community fears or prejudices. For example, mainstream outlet Bild published a story about a woman in Frankfurt raped by a mob of men of “middle-eastern” descent that turned out to be false. In that case, the story was published by reputable sources, making it harder for consumers to discern fact from fiction. Occasionally, fact-checking itself may make matters worse: repeating false headlines draws more attention to a story.

Further research on the societal factors that cause a particular story to spread would be valuable. More information is needed on how identity plays into our willingness to believe fact-checking. Group identity, whether political, gender, class, or religion, could seriously impact the effectiveness of fact-checking and explain the persistence of certain stories.

Germany’s new law has already inspired copycat laws, most notably in Russia. The Russian bill, closely mirroring the Network Enforcement Act, goes into effect in 2018. Russian social media networks and human rights activists are worried that the law will increase media self-censorship. The concerns are valid, given the already rampant crackdown on free speech in Russia. While the media environment in Germany is vastly different, passing the law has already introduced new global norms.

One encouraging aspect of our study of “IM Erika” is that the impact of the story did lessen over time, albeit slowly. However, even amid threats of legal consequences and increased fact-checking, the story did not completely disappear. Given the new legislation, it is difficult to predict the long-term outcomes, but cracking down with fines or even fact-checking alone will likely not be enough to combat the way stories spread on social media or the power of rumors and conspiracy theories. Misinformation preceded the internet, and, so far, it seems able to survive it.

Niko Efstathiou is a recent graduate of Columbia University’s School of International and Public Affairs, specializing in technology, media, and communications. Niko graduated from Yale University with a BA in political science and was selected as a 2016 Google News Lab Fellow partnering with WITNESS Media Lab to work on human rights monitoring through citizen video. He reported on the rise of fake news at Davos 2017 and is a reporter and contributor for various publications in Greece and abroad.

Bebe Santa-Wood holds a master’s of international affairs in human rights from Columbia’s School of International and Public Affairs. Her research interests include trust in media, gender, and the intersection of journalism and human rights. You can follow her at @bsantawood.